Azure Data Lake Storage Gen2

Note: Only supported on Databricks compute.

Azure Data Lake Storage Gen2 (ADLS Gen2) is a set of big data analytics capabilities built on Azure Blob Storage. ADLS Gen2 allows enterprises to deploy data lake solutions on Azure and store petabytes of data across many data files.

Use this datastore to read big data from the cloud, such as blob files, log files, IoT data, click streams, and other large datasets, or to write data to the cloud. The datastore contains folders, which in turn contain data stored as files.

Guzzle’s Ingestion activity supports ADLS Gen2 as both source and sink (target), and can copy data to and from it using either storage access keys or a service principal.

Steps to create Datastore for ADLS Gen2

Click the action button in the Datastores section of the left navigation and select the ADLS Gen2 connector. Alternatively, you can launch it from the Create New Datastore link in the Activity authoring UI or the Copy Data tool.

Enter the Datastore name for the new datastore and click Ok

Update the connection name or leave the default. You can refer to Connection and Environments for more details

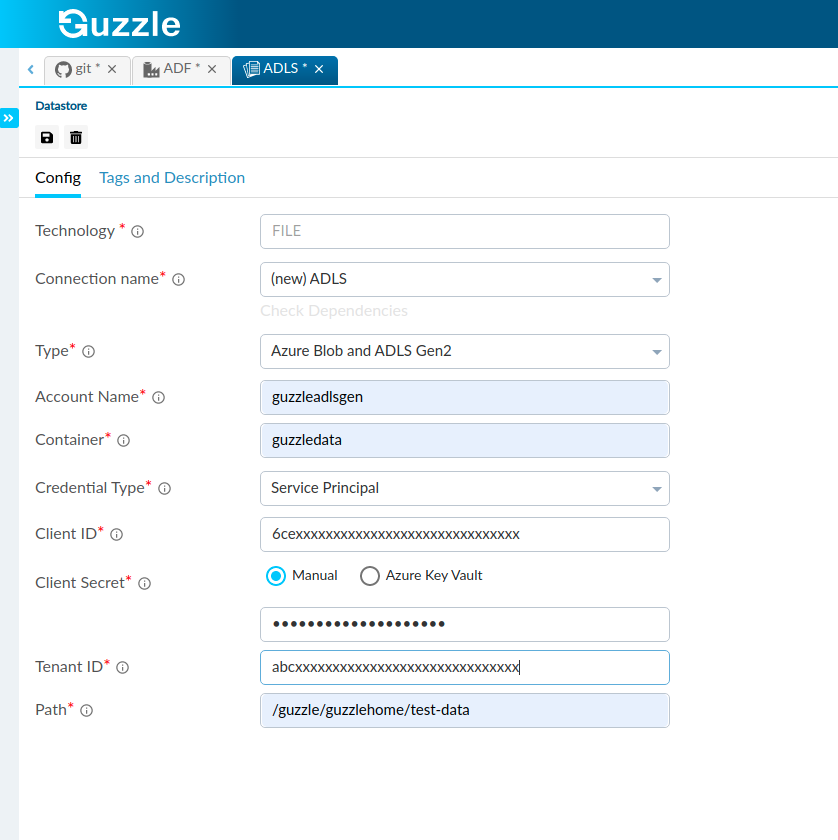

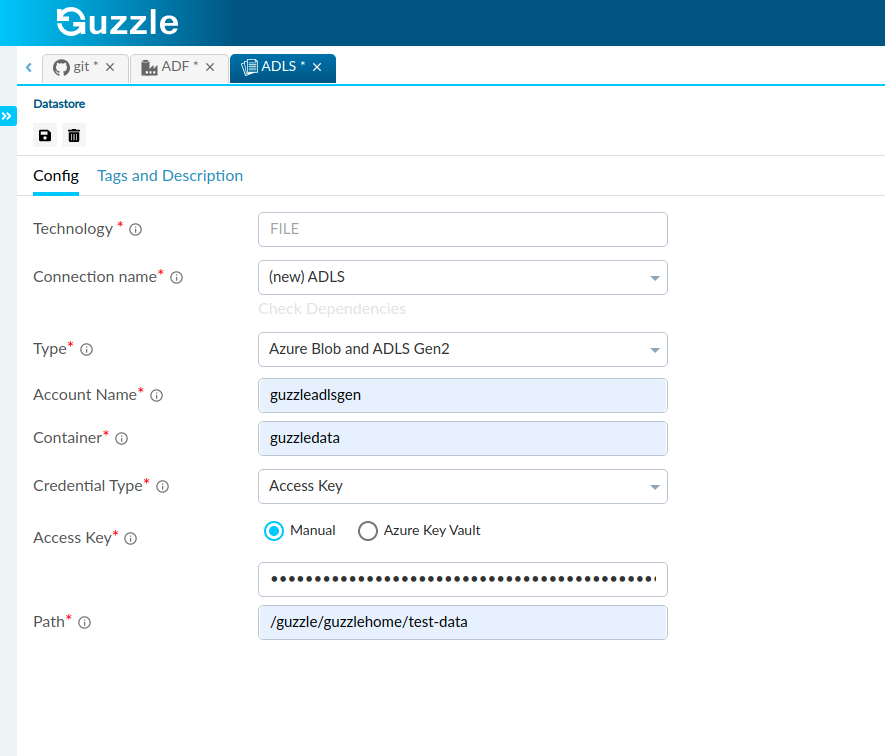

Enter the additional properties for the storage account as described below:

| Property | Description | Required |
|---|---|---|
| Account Name | Name of the storage account | Yes |
| Container Name | Name of the ADLS Gen2 container to connect to | Yes |
| Credential Type | Authentication type to use when connecting to ADLS Gen2. Two options are supported: Service Principal and Access Key. Service Principal: uses a service principal to access the selected container or folder in the storage account. Follow the steps in Register your application with an Azure AD tenant to create an application registration and capture the Application (client) ID, Client secret, and Directory (tenant) ID. Also ensure the following permissions. As source: grant Execute permission on all parent folders, along with Read permission on the files to copy; alternatively, in Access control (IAM), grant at least the Storage Blob Data Reader role at the container or storage account level. As sink (target): grant Execute permission on all parent folders, along with Write permission on the sink folders; alternatively, in Access control (IAM), grant at least the Storage Blob Data Contributor role at the container or storage account level. Access Key: uses the storage account access key to access the data. Provide the access key shown for the storage account in the Azure portal. Service Principal is the recommended option. | Yes |
| Client ID | Client ID of the service principal | Yes (for Credential Type: Service Principal) |
| Client Secret | Client secret of the service principal. It can be provided in two ways: 1. Manual: enter the client secret directly. 2. Azure Key Vault: Key Vault must first be integrated with Guzzle (refer to the Key Vault integration documentation); then provide the Key Vault name and the name of the secret in which the client secret is stored. | Yes (for Credential Type: Service Principal) |
| Tenant ID | Directory (tenant) ID of the service principal | Yes (for Credential Type: Service Principal) |
| Access Key | Storage account access key | Yes (for Credential Type: Access Key) |
| Path | Folder path within the ADLS Gen2 container. Specify / (the root path) to point to the entire container. | Yes |
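The Required column above depends on the chosen Credential Type, which can be sketched as a small check run before saving. This is only an illustration: the property keys (`account_name`, `credential_type`, and so on) and the function name are hypothetical and are not Guzzle's actual config field names.

```python
# Hypothetical property keys; Guzzle's real config field names may differ.
COMMON_REQUIRED = ("account_name", "container_name", "credential_type", "path")

# Extra properties required for each credential type, per the table above.
REQUIRED_BY_CREDENTIAL = {
    "service_principal": ("client_id", "client_secret", "tenant_id"),
    "access_key": ("access_key",),
}


def missing_properties(props: dict) -> list:
    """Return the required property names that are absent or empty."""
    cred_type = props.get("credential_type")
    if cred_type and cred_type not in REQUIRED_BY_CREDENTIAL:
        raise ValueError(f"unsupported credential type: {cred_type!r}")
    required = COMMON_REQUIRED + REQUIRED_BY_CREDENTIAL.get(cred_type, ())
    return [name for name in required if not props.get(name)]
```

An empty list means every property marked Yes for the selected credential type has a value.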

- Save the Datastore config. Optionally you can also Test the connection.
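For orientation, the datastore properties correspond closely to the settings the Hadoop ABFS driver expects on Spark and Databricks. The sketch below is not Guzzle's internal implementation. It only shows, under that assumption, how the account name, container, path, and credentials would typically translate into an `abfss://` URI and Spark configuration keys. The function names are hypothetical; the configuration keys are the standard ABFS driver settings.

```python
def abfss_uri(account_name: str, container_name: str, path: str = "/") -> str:
    """Build the abfss:// URI for a folder inside an ADLS Gen2 container."""
    host = f"{account_name}.dfs.core.windows.net"
    return f"abfss://{container_name}@{host}/{path.lstrip('/')}"


def access_key_conf(account_name: str, access_key: str) -> dict:
    """Spark settings for Credential Type: Access Key."""
    host = f"{account_name}.dfs.core.windows.net"
    return {f"fs.azure.account.key.{host}": access_key}


def service_principal_conf(account_name: str, client_id: str,
                           client_secret: str, tenant_id: str) -> dict:
    """Spark settings for Credential Type: Service Principal (OAuth 2.0)."""
    host = f"{account_name}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{host}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{host}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{host}": client_id,
        f"fs.azure.account.oauth2.client.secret.{host}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{host}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }
```

In a Databricks notebook, each key/value pair could be applied with `spark.conf.set(key, value)` before reading from or writing to the URI returned by `abfss_uri`.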

Interface for ADLS Gen2 datastore

Sample config when using Service Principal as the Credential Type

Sample config when using Access Key as the Credential Type

Known Limitation

--