HDFS

Hadoop Distributed File System (HDFS) is a distributed file system that provides high-throughput access to application data. The Guzzle Ingestion activity supports ingesting data from HDFS.

note

- Supported only with Local Spark and YARN

- Ensure the Hadoop core-site file (core-site.xml) is set up correctly on the YARN or Local Spark cluster

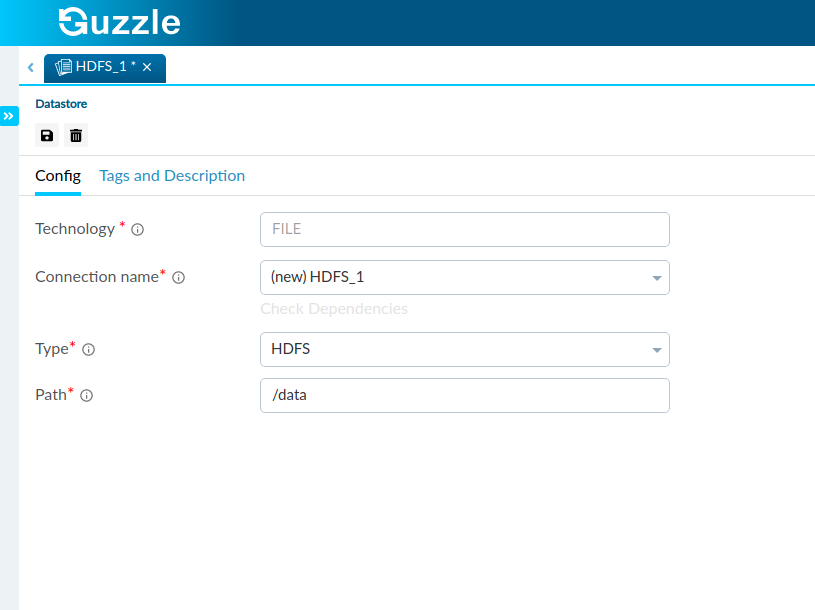
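The note above asks for a correctly configured Hadoop core-site file. As a rough way to sanity-check that file before creating the datastore, the sketch below reads the standard Hadoop property `fs.defaultFS` from the contents of a `core-site.xml`. The helper name `read_default_fs`, the sample host name, and the port are illustrative assumptions and are not part of Guzzle; which properties Guzzle actually relies on is not stated here.

```python
import xml.etree.ElementTree as ET


def read_default_fs(core_site_xml):
    """Return the fs.defaultFS value from core-site.xml content, or None.

    Hypothetical helper for illustration only; it is not part of Guzzle.
    """
    root = ET.fromstring(core_site_xml)
    # Hadoop config files are a flat list of <property> elements,
    # each holding a <name> and a <value>.
    for prop in root.iter("property"):
        name = prop.findtext("name", default="").strip()
        if name == "fs.defaultFS":
            value = prop.findtext("value", default="").strip()
            return value or None
    return None


# Sample content with a placeholder NameNode address (assumed values).
SAMPLE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode.example.com:8020</value>
  </property>
</configuration>"""

print(read_default_fs(SAMPLE))
```

To check a real cluster, pass in the text of the `core-site.xml` that the YARN or Local Spark environment uses. A `None` result suggests the default file system is not set in that file.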

Steps to create Datastore for HDFS

Click on the action button in the Datastores section of the Left Navigation and select Server file system connector. Alternatively, you can launch it from the Create New Datastore link in the Activity authoring UI or the Copy Data tool.

Enter the Datastore name for the new datastore and click Ok.

Update the connection name or leave the default. Refer to Connection and Environments for more details.

Enter the Path to be used as the base directory for reading or writing files.

Save the Datastore config. Optionally, you can Test the connection.

Interface for HDFS datastore