Databricks File System

Databricks File System (DBFS) is an abstraction provided by Azure Databricks for accessing cloud object stores such as Azure Blob Storage, ADLS Gen2, and Amazon S3. Once a cloud object store is mounted as a DBFS mount in a Databricks workspace, it can be accessed from Guzzle jobs, as well as from Databricks notebooks and Spark applications deployed in that workspace, without providing credentials or storage URLs.

Note

- Only the Ingestion activity supports file-based connectors.

- DBFS datastores are supported only with Azure Databricks compute.

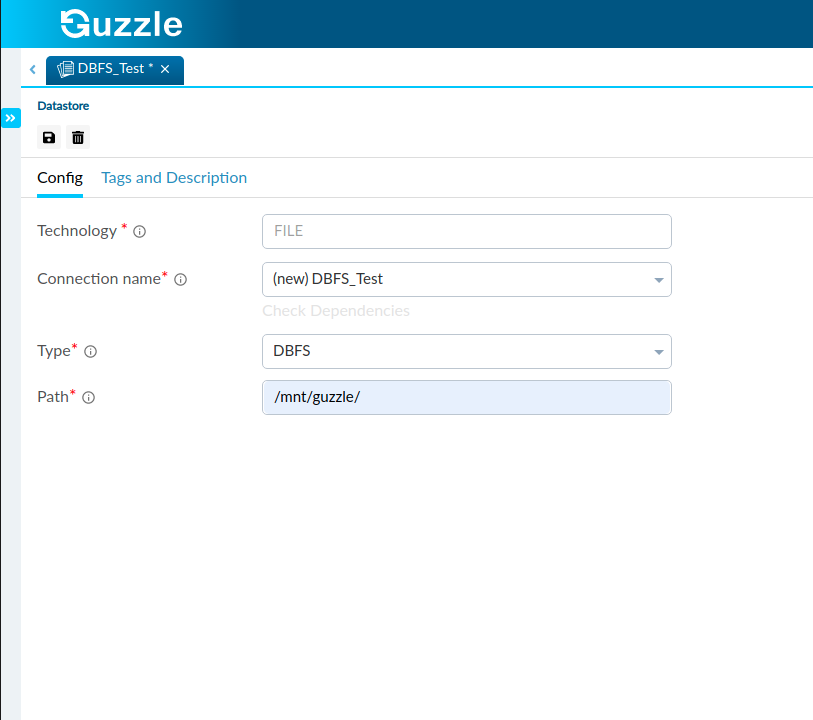

Steps to create Datastore for DBFS

Click the action button in the Datastores section of the left navigation and select the DBFS connector. Alternatively, you can launch it from the Create New Datastore link in the Activity authoring UI or the Copy Data tool.

Enter a name for the new Datastore and click OK.

Update the connection name or leave the default. Refer to Connection and Environments for more details.

Provide the root path within the Databricks File System (DBFS). You don’t need to include the dbfs:/ prefix.

Save the Datastore configuration. Optionally, you can Test the connection.
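To illustrate how a root path entered without the dbfs:/ prefix relates to a full DBFS location, here is a minimal Python sketch. The helper names `normalize_root` and `to_dbfs_uri` are hypothetical and are not part of Guzzle or Databricks. The sketch only shows one plausible way a root path and a relative file path could be combined, and should not be read as how Guzzle resolves paths internally.

```python
from posixpath import join, normpath

DBFS_SCHEME = "dbfs:/"  # prefix that is optional when entering the root path


def normalize_root(root: str) -> str:
    """Hypothetical helper: return the root path without the dbfs:/ prefix,
    with exactly one leading slash and no trailing slash."""
    path = root.strip()
    if path.lower().startswith(DBFS_SCHEME):
        path = path[len(DBFS_SCHEME):]
    return "/" + path.strip("/")


def to_dbfs_uri(root: str, relative: str) -> str:
    """Hypothetical helper: combine a datastore root path and a relative
    file path into a full dbfs:/ location."""
    full = join(normalize_root(root), relative.lstrip("/"))
    return "dbfs:" + normpath(full)


if __name__ == "__main__":
    # Both forms of the root path resolve to the same location.
    print(to_dbfs_uri("mnt/raw", "sales/2024.csv"))
    print(to_dbfs_uri("dbfs:/mnt/raw/", "/sales/2024.csv"))
```

In this sketch, entering `mnt/raw` or `dbfs:/mnt/raw/` as the root path leads to the same location, which is why the prefix can be omitted.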

Interface for DBFS datastore