Delta Lake

Delta Lake is an open-format storage layer that delivers reliability, security, and performance on your data lake. Guzzle has extensive support for Delta tables, including the following features:

Delta tables are supported by all Guzzle activity types, namely Ingestion, Processing, Reconciliation, Check Constraint, and Housekeeping.

Support for referring to Delta tables either as:

Delta paths, for example: delta.`/mnt/data1/data/customerdb/customer`

Delta tables (registered to an internal or external metastore), for example: customerdb.customer

Ability to auto-create the Delta table if it is not present (applies only to the Ingestion module), including auto-creating partitioned tables.

Ingestion activity supports Append and Overwrite modes. Overwrite happens at the partition level if the table is partitioned, or on the entire table for non-partitioned tables. Refer to [Working with Delta] for ingesting data.

Processing activity supports Delta targets using the Template approach or the Spark DataFrame approach. The Template approach leverages native DML commands (MERGE, UPDATE, INSERT INTO ... SELECT) when moving data from one Delta table to another Delta table. Refer to [Working with Delta for Processing].

note

Only supported on Databricks compute
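The two ways of referring to a Delta table listed above can be sketched in Python as follows. This is only an illustration: the helper `delta_reference` is a hypothetical name, not a Guzzle API, and the rule it uses to recognise a path (a leading `/` or a `://` scheme) is an assumption made for this example.

```python
def delta_reference(target: str) -> str:
    """Return a Spark SQL reference for a Delta path or a metastore table name.

    Hypothetical helper for illustration only; not part of Guzzle.
    """
    # Assumption: a leading "/" or a URI scheme marks a storage path.
    if target.startswith("/") or "://" in target:
        # Path-based reference: the path is wrapped in backticks after "delta."
        return f"delta.`{target}`"
    # Otherwise treat the value as a table registered in the metastore.
    return target


path_ref = delta_reference("/mnt/data1/data/customerdb/customer")
table_ref = delta_reference("customerdb.customer")

# Both references can appear wherever a table name is expected in a query.
print(f"SELECT * FROM {path_ref}")
print(f"SELECT * FROM {table_ref}")
```

Either form identifies the same kind of object; the path form does not require the table to be registered in a metastore.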

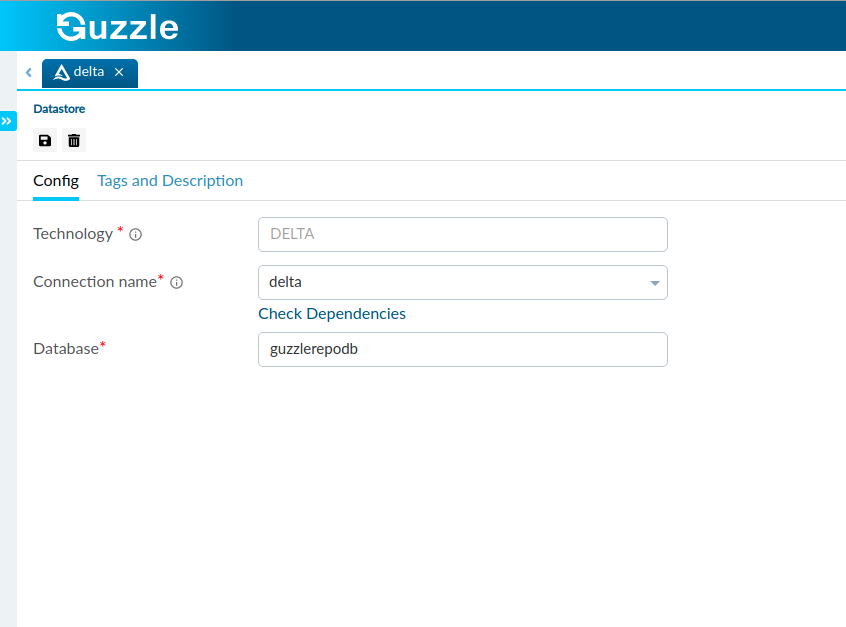

Steps to create Datastore for Delta

Click the action button in the Datastores section of the left navigation and select the DELTA connector in the Database section. Alternatively, you can launch it from the Create New Datastore link in the Activity authoring UI or from the Copy Data tool.

Enter a name for the new datastore and click Ok.

Update the connection name or leave the default. Refer to Connection and Environments for more details.

Optionally, enter the Database. It is used as the schema name for source or target/reject tables in all activities when no schema name is provided along with the table name. This property is ignored if SQL is used for the source or target, if a schema name prefix is provided along with the table name, or if a Delta path is used.

Save the Datastore config. Optionally, you can also Test the connection.
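The effect of the optional Database property described in the steps above can be sketched as a small resolution rule. This is a minimal illustration under stated assumptions, not Guzzle's implementation: `resolve_table` is a hypothetical function, and the case where SQL is used for the source or target is left out because the SQL is taken as written.

```python
from typing import Optional


def resolve_table(table: str, database: Optional[str] = None) -> str:
    """Apply the datastore's default Database to a table reference.

    Hypothetical sketch of the documented rule; not a Guzzle API.
    """
    # A Delta path reference is used as-is; the Database property is ignored.
    if table.startswith("delta.`"):
        return table
    # A schema prefix supplied with the table name takes precedence.
    if "." in table:
        return table
    # No schema prefix: fall back to the datastore's Database, if one is set.
    if database:
        return f"{database}.{table}"
    return table


print(resolve_table("customer", "customerdb"))
print(resolve_table("salesdb.customer", "customerdb"))
print(resolve_table("delta.`/mnt/data1/data/customerdb/customer`", "customerdb"))
```

Here `salesdb` is a made-up schema name used only to show that an explicit prefix is not overridden.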

Interface for Delta Lake Datastore